ctrl+r #02: How to not get stupid & The Parquet Killer?

A weekly recall from the terminal of my mind: Thoughts 🧠, 🛠 Tools, and 📕 Takes.

🧠 The LLM paradox: preserving critical thinking

This has been on my mind for months. With LLMs at our fingertips, we risk becoming lazy thinkers. We ask a question, get the answer, and copy-paste... often without understanding the “how” or engaging our own brains first. It feels like our short- and long-term memory is taking a hit.

Do you actually remember most of the answers you get from LLMs?

That said, AI is a powerful learning tool. Harvard has an internal AI chat for their CS50 students. It used to just “quack” (seriously, based on the rubber duck debugging technique), but now it speaks English with what they call “pedagogical guardrails.”

Instead of just giving answers, it nudges students to think critically, ask questions, and work their way toward the solution.

Anthropic also announced Claude for Education back in April 2025 (aka “learning mode”). It’s not publicly available yet, but I suspect it follows the same Socratic approach.

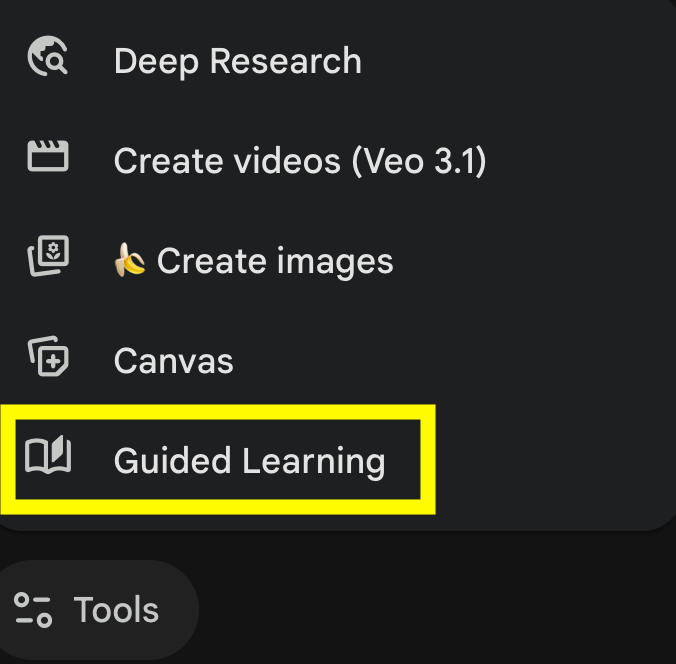

I tried Gemini’s “Guided learning” announced in August 2025, but I was a bit disappointed.

I asked, “Can you explain to me what Apache Kafka is and how it works?” It jumped straight into a technical answer without assessing my background or the level of depth I wanted. It did eventually ask a follow-up, e.g. “What part of the Kafka system would you like to explore next?”, but only after the full answer.

I feel there is definitely space to improve how AI scaffolds learning rather than just retrieving facts.

🛠 Vortex: file format rethought for our era

Parquet is legendary, but it was built for a different hardware era: spinning disks, HDFS, and large sequential scans. Today, we read data from fast NVMe and S3, mixing analytics with ML workloads that require quick, selective access.

Vortex rethinks the layout for this modern environment: it aligns directly with Arrow’s in-memory format, ditches Parquet’s heavy row-group structure, and uses flexible encodings. The result is 10–20× faster table scans and up to 100× faster random access, without sacrificing compression. For data engineers building retrieval-heavy systems, Vortex reduces I/O and CPU overhead while keeping the familiar columnar model.

For any new standard, adoption is key. Vortex already supports Arrow, DataFusion, DuckDB, Spark, Pandas, and Polars, and Apache Iceberg support is coming soon (!).

It was recently added as a core extension in DuckDB, so I gave it a spin against a ~1GB Parquet file. The results were mixed: Vortex was faster, but not crazy faster, and the file size was only 3% smaller. I need to do more rigorous testing, but it looks promising!
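For the more rigorous round, here is a minimal sketch of the kind of timing harness I have in mind. It uses only the Python standard library. The names `median_runtime` and `compare` are hypothetical helpers made up for this example, not part of DuckDB or Vortex. The idea is simply to warm up, repeat each query several times, and compare medians rather than trusting a single run.

```python
import statistics
import time
from typing import Callable, Dict


def median_runtime(run_query: Callable[[], object],
                   warmups: int = 1,
                   repeats: int = 5) -> float:
    """Return the median wall-clock seconds of `run_query` over `repeats` timed runs."""
    # Untimed warm-up runs, so cold caches do not skew the first measurement.
    for _ in range(warmups):
        run_query()

    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()
        timings.append(time.perf_counter() - start)

    # The median is less sensitive to one slow outlier than the mean.
    return statistics.median(timings)


def compare(candidates: Dict[str, Callable[[], object]],
            repeats: int = 5) -> Dict[str, float]:
    """Time every named callable and return {name: median seconds}."""
    return {name: median_runtime(fn, repeats=repeats)
            for name, fn in candidates.items()}
```

With the `duckdb` Python package, each callable could wrap something like `con.execute("SELECT ... FROM read_parquet('data.parquet')").fetchall()` and the same query against the Vortex copy of the file. I'm leaving the exact Vortex read syntax out on purpose: check the extension docs rather than trusting my memory. Run the same query text on both sides, and vary the query shape (full scan, selective filter, single-row lookup), since the claimed gains differ a lot between scans and random access.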

📚 What I Read / Watched

Agent Design Is Still Hard: Armin Ronacher (creator of Flask) shares gems on building agents with pragmatic takeaways. A must-read if you are building an agent!

Librepods: Someone reverse-engineered Apple’s protocol to unlock all AirPod features on Android. Note: you need a rooted phone unless you are on Oppo/OnePlus.

Post-mortem of Cloudflare’s outage: The “Internet was down” again thanks to Cloudflare. Hats off to them for publishing a detailed post-mortem so quickly. The root cause was a change to ClickHouse permissions that made a metadata query return duplicates, generating a bad config file. Insane how a small issue can escalate into something the whole world notices.

Fixing standup the only way I know how: Dreams of Code tries to automate stand-ups using n8n. TBH, might be over-engineered, but definitely an interesting attempt to solve the “problem” of the standup. Comments are interesting too.

I was speaking at the Forward Data conference today in Paris and the vibe was great!

Here’s me spreading the Ducklife (made with duckify.ai)