Your Next Container Strategy: From Development to Deployment

Learn how to manage Dockerfiles and versions through a working Python API project

In today's development world, containers are everywhere. They are a practical way to test things locally and to deploy to any cloud service that supports container images. However, it's easy to fall into the hell of Dockerfiles and versions.

In this guide, I'll lay out a clear strategy, with an example, for setting up your containers from development to deployment with an awesome Makefile. We will take a Python API project deployed on Cloud Run as a case study, and you will have a full working code example to play with.

The strategy 🗺

The goal is to have little to no difference between the development environment (Docker Desktop), the CI processes (e.g., GitHub Actions, for testing/deployment), and the application runtime (GCP Cloud Run).

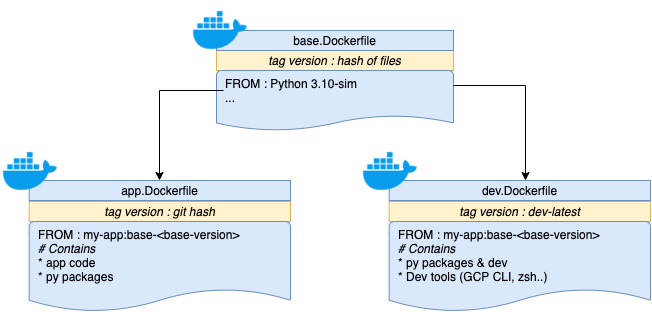

To do so, we will split them into multiple Dockerfiles. This brings several advantages:

Each Dockerfile stays small

We can hash a Dockerfile to detect a change and use the hash as a container version tag

We will use a powerful Makefile as a single entry point for both development and CI.

Base container

What's inside?

This is basically the root of all other containers (here development/CI and application). This is where you usually define which OS you are going to use and put the baseline for the programming language.

In practice, we are going to put here:

Debian 10

Python 3.9

A few Debian packages like curl, git, etc.

Sometimes your company may have a base image that they want you to use, to avoid too much disparity in Linux distributions and to manage security patches better.

Here, we will directly use an image from the official Python repository.

How to manage the version?

A simple strategy is to compute an MD5 hash of a list of files or folders. That way, we are sure that the version we generate is tied to the content of the code and is unique. Such a script will look like this.
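A minimal sketch of such a script, assuming GNU `md5sum` is available and that each layer is defined by a short list of files. The paths in `files_for_layer` are hypothetical placeholders, not the project's actual layout, and this version hashes files only (folders would need to be expanded into a sorted file list first).

```shell
#!/bin/sh
# version.sh (sketch): print a short md5-based tag for a Docker layer.
set -eu

# Hash the concatenated content of the given files, in the order given.
# Assumes GNU md5sum; the first 12 hex characters are kept as the tag.
hash_files() {
  cat "$@" | md5sum | cut -c1-12
}

# Hypothetical mapping from a layer name to the files that define it.
files_for_layer() {
  case "$1" in
    base) echo "docker/base.Dockerfile" ;;
    dev)  echo "docker/dev.Dockerfile requirements-dev.txt" ;;
    *)    echo "unknown layer: $1" >&2; return 1 ;;
  esac
}

# Only run when a layer name is passed, e.g. ./version.sh dev
if [ "$#" -gt 0 ]; then
  files="$(files_for_layer "$1")"
  # Word splitting of the file list is intentional here.
  # shellcheck disable=SC2086
  hash_files $files
fi
```

Because the tag depends only on file content, two builds from identical files get the same tag, and any edit to a listed file produces a new one.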

We use the same script for every Docker layer, passing the layer name as an argument. Getting the latest version of the dev layer would be: ./version.sh dev

If you have any scripts or files that you use within that base image (for example: creating a custom Linux user), feel free to add them to the hash to keep track of these changes!

Application container

What's inside?

Everything included in the base image

Code

Python packages

What would define the version?

It can be a simple hash. Here, we use a Git hash, which covers the whole directory.
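One way to obtain such a hash is to ask Git for the current commit. The sketch below assumes the code lives in a Git repository with at least one commit; the function name `app_version` is hypothetical.

```shell
#!/bin/sh
# Sketch: derive the application image tag from Git.

# Print the abbreviated hash of the current commit of the repository at $1
# (defaults to the current directory).
app_version() {
  git -C "${1:-.}" rev-parse --short HEAD
}
```

Uncommitted changes are not reflected in a commit hash, so this tag is most meaningful on a clean working tree, as is typically the case in CI.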

Development container 🏗

Our strategy is to leverage the devcontainer feature of VS Code so that we can have a fully isolated development environment built on the base image.

What’s inside?

Everything included in the base image

No code (as it will be a mounted volume so that we can have hot reload while developing)

CLI tools (GCP CLI)

Python packages (and dev dependencies)

What would define the version?

No need to version this one unless you want to speed up the build time by pushing the image to a remote Docker registry.

Packaging everything using Makefile

The only requirements to develop/build/test/deploy are make and docker!

We will have a single build command with a DOCKER_LAYER parameter that can be base, app, or dev. It dynamically gets the latest version, then tries to pull the image from a remote registry and builds it if it does not exist.

To respect the dependency (base → dev and base → app) between docker layers, we can add a simple if/else case so that the base layer is always built upfront no matter which layer we are requesting.

Example:

make get-img DOCKER_LAYER=dev

will first pull or build the latest version of the base Docker image.

The Make target get-img looks like this:
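The pull-or-build logic with the base-first dependency can be sketched as a shell function that a Makefile recipe could wrap. `REGISTRY`, the Dockerfile paths, and the `image_version` helper are assumptions made for illustration, not names taken from the project.

```shell
#!/bin/sh
# Sketch of the logic behind a get-img target.

# Hypothetical registry prefix; override it from the environment.
REGISTRY="${REGISTRY:-gcr.io/my-project}"

# Hypothetical helper: resolve the version tag of a layer.
# Assumes a ./version.sh script that accepts the layer name.
image_version() {
  ./version.sh "$1"
}

# Pull the image for one layer, or build it locally if the pull fails.
pull_or_build() {
  tag="$REGISTRY/$1:$(image_version "$1")"
  docker pull "$tag" || docker build -f "docker/$1.Dockerfile" -t "$tag" .
}

# Always resolve the base layer first, then the requested layer.
get_img() {
  if [ "$1" != "base" ]; then
    pull_or_build base
  fi
  pull_or_build "$1"
}
```

Calling `get_img dev` resolves `base` first and then `dev`, while `get_img base` touches the base layer only.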

Automating tests & deployment with the CI

Now that all our actions are containerized, our CI actions will be pretty simple. Apart from a dedicated authentication step for the cloud service, everything else is just a make command.

Notice that we push the images (base, dev) when preparing the CI images. This will speed up the CI process when doing development on branches (if there's no change in the base image).
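Put together, a CI job could reduce to a handful of make calls. In the sketch below, only `get-img` and `DOCKER_LAYER` come from this guide; the `push-img`, `test`, and `deploy` targets are hypothetical names used for illustration.

```shell
#!/bin/sh
# Sketch of the commands a CI job might run after authenticating to the cloud.
# push-img, test, and deploy are hypothetical target names.

ci_pipeline() {
  # Prepare the CI images: pull or build (base is resolved first).
  make get-img DOCKER_LAYER=dev || return 1
  # Push base and dev so that later runs can pull instead of rebuilding.
  make push-img DOCKER_LAYER=base || return 1
  make push-img DOCKER_LAYER=dev || return 1
  # Run the tests, then get the application image and deploy it.
  make test || return 1
  make get-img DOCKER_LAYER=app || return 1
  make deploy || return 1
}
```

Each step stops the pipeline on failure, so a failing test run never reaches the deploy step.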

Conclusion

With this example, you have a good overview of how to containerize your next project from development to deployment!

Keeping the base image consistent across the different layers will help you move faster when you need to upgrade or test different versions of your dependencies.

A lot of things can be easily adapted or extended depending on your needs and the tradeoff that you want to make.

The full code of the project can be found here.

Happy Coding!
